This guide to ETL alternatives is continuously evolving. You can join the conversation in the Estuary Slack workspace and on the Estuary LinkedIn pages.

This guide is meant for three audiences:

- Companies focused on lowering latency when loading a cloud data warehouse

- Companies implementing real-time analytics using specialized databases

- Companies starting to implement their first AI projects

Key Data Integration Methods to Consider

There are three data integration alternatives to consider:

- ELT (Extract, Load, and Transform) - Most ELT technologies are newer, built within the last 10-15 years. ELT is focused on running transforms using dbt or SQL in cloud data warehouses. It’s mostly multi-tenant SaaS, with some open source options as well. If you’re interested in ELT vendors you can read The Data Engineer’s Guide to ELT Alternatives, which is part 2 of this series.

- ETL (Extract, Transform, and Load) - ETL started to become popular 30 years ago as the preferred way to load data warehouses. Over time it was also used for data integration and data migration. This is the focus of this guide.

- CDC (Change Data Capture) - CDC is really a subset of ETL and ELT, but technologies like Debezium can be used to build your own custom data pipeline. If you’re interested in CDC you can read The Data Engineer’s Guide to CDC for Analytics, Ops, and AI Pipelines, which is part 1 of this series.

Why ETL Still Matters

ETL was the first version of these technologies for loading data warehouses. When cloud data warehouses entered the market, ETL’s popularity declined. That’s in part because modern ELT was originally built specifically for loading BigQuery, Snowflake, and Redshift. Pay-as-you-go cloud data warehouses, combined with pay-as-you-go ELT that runs the “T” as dbt or SQL inside the warehouse, made it easy to get started.

But ETL continued to be used in Global 2000 companies and for other projects. Data integration with other destinations usually requires ETL and real-time data movement. Companies that have regulatory and security requirements need private cloud or on-premises deployments. Most have historically opted for ETL for these reasons.

Top ETL Alternatives for 2024

This ETL guide compares the following ETL vendors:

- Estuary

- Informatica

- Matillion

- Rivery (ELT/ETL)

- Talend

If you’re interested in comparing ELT vendors you’ll find them in the ELT guide.

While there is a summary of each vendor, an overview of their architecture, and when to consider them, the detailed comparison is best seen in the comparison matrix, which covers the following categories:

- 9 Use cases - database replication, replication for operational data stores (ODS), historical analytics, data integration, data migration, stream processing, operational analytics, data science and ML, and AI

- Connectors - packaged, CDC, streaming, 3rd party support, SDK, and APIs

- Core features - full/incremental snapshots, backfilling, transforms, languages, streaming+batch modes, delivery guarantees, time travel, data types, schema evolution, DataOps

- Deployment options - public (multi-tenant) cloud, private cloud, and on-premises

- The “abilities” - performance, scalability, reliability, and availability

- Security - including authentication, authorization, and encryption

- Costs - Ease of use, vendor and other costs you need to consider

Over time, we will also be adding more information about other vendors that at the very least deserve honorable mention, and at the very best should be options you consider for your specific needs. Please speak up if there are others to consider.

It’s OK to choose a vendor that’s good enough. The biggest mistakes you can make are:

- Choosing a good vendor for your current project but the wrong vendor for future needs

- Not understanding your future needs

- Not insulating yourself from a vendor

Make sure to understand and evaluate your future needs and design your pipeline for modularity so that you can replace components, including your ELT/ETL vendor, if necessary.
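One way to get that insulation, sketched here in Python, is to have your pipeline code depend on a small interface you own rather than on a vendor SDK. The names `Loader`, `InMemoryLoader`, and `run_pipeline` are hypothetical and do not come from any vendor's API. A real adapter would wrap whichever ELT/ETL service you choose.

```python
from typing import Iterable, Protocol


class Loader(Protocol):
    """Hypothetical interface owned by your pipeline; each vendor gets its own adapter."""

    def load(self, table: str, rows: Iterable[dict]) -> int:
        """Load rows into a destination table and return the number of rows written."""
        ...


class InMemoryLoader:
    """Stand-in adapter for illustration; a real one would call a vendor API."""

    def __init__(self) -> None:
        self.tables: dict[str, list[dict]] = {}

    def load(self, table: str, rows: Iterable[dict]) -> int:
        batch = list(rows)
        self.tables.setdefault(table, []).extend(batch)
        return len(batch)


def run_pipeline(loader: Loader, table: str, rows: Iterable[dict]) -> int:
    # The pipeline only knows the Loader interface, so replacing a vendor
    # means writing a new adapter rather than rewriting the pipeline.
    return loader.load(table, rows)


loader = InMemoryLoader()
written = run_pipeline(loader, "orders", [{"id": 1}, {"id": 2}])
print(written)
```

The trade-off is that a thin interface like this only covers the features every vendor shares, so anything vendor-specific still needs its own migration plan.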

Hopefully by the end of this guide you will understand the relative strengths and weaknesses of each vendor, and how to evaluate these vendors based on your current and future needs.

Comparison Criteria

This guide starts with the detailed comparison before moving into an analysis of each vendor’s strengths, weaknesses, and when to use them.

The comparison matrix covers the following categories:

Use cases - Over time, you will end up using data integration for most of these use cases. Make sure you look across your organization for current and future needs. Otherwise you might end up with multiple data integration technologies, and a painful migration project.

- Replication - Read-only and read-write load balancing of data from a source to a target for operational use cases. CDC vendors are often used in cases where built-in database replication does not work.

- Operational data store (ODS) - Real-time replication of data from many sources into an ODS for offloading reads or staging data for analytics.

- Historical analytics - The use of data warehouses for dashboards, analytics, and reporting. CDC-based ETL or ELT is used to feed the data warehouse.

- Operational data integration - Synchronizing operational data across apps and systems, such as master data or transactional data, to support business processes and transactions.

NOTE: none of the vendors in this evaluation support many apps as destinations. They only support data synchronization for the underlying databases.

- Data migration - This usually involves extracting data from multiple sources, building the rules for merging data and data quality, testing it out side-by-side with the old app, and migrating users over time. The data integration vendor used becomes the new operational data integration vendor.

NOTE: ELT vendors only support database destinations for data migration.

- Stream processing - Using streams to capture and respond to specific events.

- Operational analytics - The use of data in real-time to make operational decisions. It requires specialized databases with sub-second query times, and usually also requires low end-to-end latency with sub-second ingestion times as well. For this reason the data pipelines usually need to support real-time ETL with streaming transformations.

- Data science and machine learning - This generally involves loading raw data into a data lake that is used for data mining, statistics and machine learning, or data exploration including some ad hoc analytics. For data integration vendors this is very similar to loading data lakes/lakehouses/warehouses.

- AI - The use of large language models (LLMs) or other artificial intelligence and machine learning models to do anything from generating new content to automating decisions. This usually involves different data pipelines for model training and model execution, including Retrieval Augmented Generation (RAG).

Connectors - The ability to connect to sources and destinations in batch and real-time for different use cases. Most vendors have so many connectors that the best way to evaluate vendors is to pick your connectors and evaluate them directly in detail.

- Number of connectors - The number of source and destination connectors. What’s important is the number of high-quality and real-time connectors, and that the connectors you need are included. Make sure to evaluate each vendor’s specific connectors and their capabilities for your projects. The devil is in the details.

- Streaming (CDC, Kafka) - All vendors support batch. The biggest difference is how much each vendor supports CDC and streaming sources and destinations.

- Destinations - Does the vendor support all the destinations that need the source data, or will you need to find another way to load for select projects?

- Support for 3rd party connectors - Is there an option to use 3rd party connectors?

- CDK - Can you build your own connectors using a connector development kit (CDK)?

- API - Is an admin API available to help integrate and automate pipelines?

Core features - How well does each vendor support core data features required to support different use cases? Source and target connectivity are covered in the Connectors section.

- Batch and streaming support - Can the product support streaming, batch, and both together in the same pipeline?

- Transformations - What level of support is there for streaming and batch ETL and ELT? This includes streaming transforms, and incremental and batch dbt support in ELT mode. What languages are supported? How do you test?

- Delivery guarantees - Is delivery guaranteed to be exactly once and in order?

- Data types - Support for structured, semi-structured, and unstructured data types.

- Store and replay - The ability to add historical data during integration, or to add new data to targets later.

- Time travel - The ability to review or reuse historical data without going back to sources.

- Schema evolution - Support for tracking schema changes over time, and handling them automatically.

- DataOps - Does the vendor support multi-stage pipeline automation?
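To make the delivery guarantee criterion concrete, here is a minimal Python sketch of one common way to get exactly-once results on top of a source that may redeliver changes: the target remembers the highest offset it has applied and ignores anything at or below it. This is a generic illustration, not any vendor's implementation. The `IdempotentTarget` class is hypothetical, and it assumes a single ordered stream with increasing offsets.

```python
from typing import Optional


class IdempotentTarget:
    """Toy destination that applies each change from an ordered stream only once."""

    def __init__(self) -> None:
        self.rows: dict[str, dict] = {}
        self.last_applied_offset = -1  # highest source offset already applied

    def apply(self, offset: int, key: str, value: Optional[dict]) -> bool:
        # A redelivered (already applied) change is skipped, so replays are harmless.
        if offset <= self.last_applied_offset:
            return False
        if value is None:
            self.rows.pop(key, None)  # delete
        else:
            self.rows[key] = value  # insert or update
        # In a real system the row change and the offset would be committed
        # in the same transaction; here both live in memory.
        self.last_applied_offset = offset
        return True


target = IdempotentTarget()
changes = [
    (0, "a", {"v": 1}),
    (1, "a", {"v": 2}),
    (1, "a", {"v": 2}),  # redelivery after a retry
    (0, "a", {"v": 1}),  # stale replay
    (2, "b", {"v": 9}),
]
applied = [target.apply(*change) for change in changes]
print(applied, target.rows)
```

When you evaluate vendors, the question to ask is where this bookkeeping lives: in the pipeline itself, or in work you have to do in the destination.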

Deployment options - does the vendor support public (multi-tenant) cloud, private cloud, and on-premises (self-deployed and managed)?

The “abilities” - How does each vendor rank on performance, scalability, reliability, and availability?

- Performance (latency) - What is the end-to-end latency in real-time and batch mode?

- Scalability - Does the product provide elastic, linear scalability?

- Reliability - How does the product ensure reliability for real-time and batch modes? One of the biggest challenges, especially with CDC, is ensuring reliability.

Security - Does the vendor implement strong authentication, authorization, RBAC, and end-to-end encryption from sources to targets?

Costs - the vendor costs, and total cost of ownership associated with data pipelines

- Ease of use - The degree to which the product is intuitive and straightforward for users to learn, build, and operate data pipelines.

- Vendor costs - including total costs and cost predictability

- Labor costs - Amount of resources required and relative productivity

- Other costs - Including additional source, pipeline infrastructure or destination costs

Comparison Matrix for ETL Alternatives

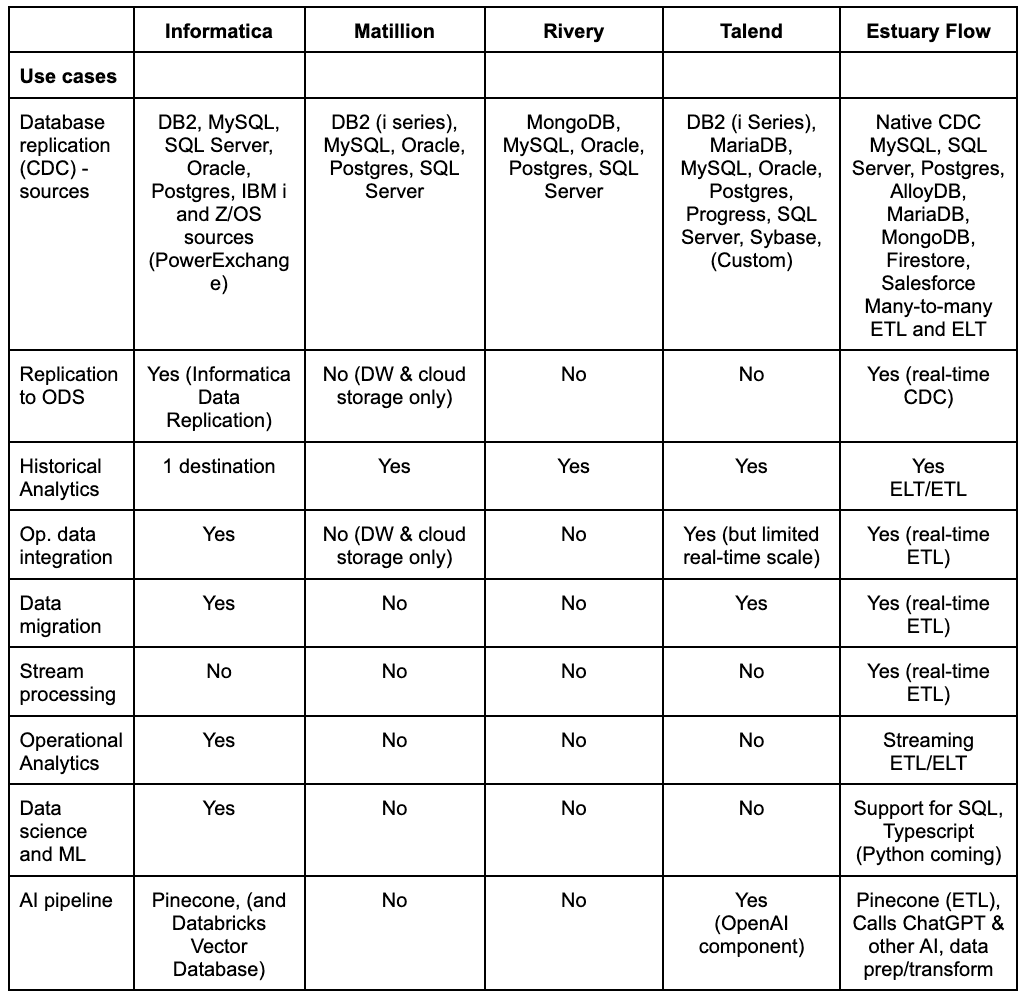

| | Informatica | Matillion | Rivery | Talend | Estuary Flow |
|---|---|---|---|---|---|
| Use cases | | | | | |
| Database replication (CDC) - sources | DB2, MySQL, SQL Server, Oracle, Postgres, IBM i and Z/OS sources (PowerExchange) | DB2 (i series), MySQL, Oracle, Postgres, SQL Server | MongoDB, MySQL, Oracle, Postgres, SQL Server | DB2 (i Series), MariaDB, MySQL, Oracle, Postgres, Progress, SQL Server, Sybase, (Custom) | Native CDC: MySQL, SQL Server, Postgres, AlloyDB, MariaDB, MongoDB, Firestore, Salesforce. Many-to-many ETL and ELT |
| Replication to ODS | Yes (Informatica Data Replication) | No (DW & cloud storage only) | No | No | Yes (real-time CDC) |
| Historical Analytics | 1 destination | Yes | Yes | Yes | Yes |
| Op. data integration | Yes | No (DW & cloud storage only) | No | Yes (but limited real-time scale) | Yes (real-time ETL) |
| Data migration | Yes | No | No | Yes | Yes (real-time ETL) |
| Stream processing | No | No | No | No | Yes (real-time ETL) |
| Operational Analytics | Yes | No | No | No | Streaming ETL/ELT |
| Data science and ML | Yes | No | No | No | Support for SQL, TypeScript |
| AI pipeline | Pinecone, (and Databricks Vector Database) | No | No | Yes | Pinecone (ETL), Calls ChatGPT & other AI, data prep/transform |

| | Informatica | Matillion | Rivery | Talend | Estuary Flow |
|---|---|---|---|---|---|
| Connectors | | | | | |
| Number of connectors | 300+ connectors | 120+ | 200+ | 50+ managed connectors | 150+ high performance connectors built by Estuary |
| Streaming connectors | Yes. CDC, Kafka via PowerExchange | Very limited. No Kafka, Kinesis. PubSub, SQS, CDC deprecated | CDC only | Yes. CDC, Kafka, Kinesis, Azure Storage Queue, PubSub, RabbitMQ, AMQP, JMS, MQTT | Streaming CDC, Kafka, Kinesis (source only) |
| Support for 3rd party connectors | No | No | No | No | Support for 500+ Airbyte, Stitch, and Meltano connectors |
| Custom SDK | Yes. Informatica Connector Toolkit | Yes. Custom connectors (API/JSON only) and Flex (preconfigured) | Yes (REST) | Yes | Yes |
| API (for admin) | No | Yes (moving to new API) | Yes | Yes Estuary API docs |

| | Informatica | Matillion | Rivery | Talend | Estuary Flow |
|---|---|---|---|---|---|
| Core features | | | | | |
| Batch and streaming | Streaming to batch, batch to streaming | Mostly batch. Limited streaming | Batch-only destinations | Streaming and batch support | Streaming to batch, batch to streaming |
| ETL Transforms | Yes. PowerCenter | Yes. Matillion, SQL, and dbt support | Python (ETL or ELT). SQL runs in target (ELT). | Yes. tMaps transformations. SQL function. Works with dbt | SQL & TypeScript (Python Q124). |
| Workflow | Yes. PowerCenter | Yes | Yes | Yes | Many-to-many pub-sub ETL |
| ELT transforms | dbt, SQL, pushdown optimization | SQL, dbt (Matillion ETL only) | Yes (SQL, Python) | dbt only. | dbt. Integrated orchestration. |
| Delivery guarantee | Exactly once | Exactly once | Exactly once | Exactly once. | Exactly once (streaming, batch, mixed) |
| Load write method | Soft and hard deletes, append and update in place | Soft and hard deletes, append and update in place (with work) | Soft and hard deletes, append and update in place | Soft and hard deletes, append and update in place (with work) | Soft and hard deletes, append and update in place |
| Store and replay | No | No | No | No | Yes. Can backfill multiple targets and times without requiring new extract. |
| Time travel | No | No | No | No | Yes |
| Schema inference and drift | Yes, with limits | Limited. New tables, fields not loaded automatically | Limited to detection in database sources | Not without coding, but can be done. | Good schema inference, automating schema evolution |
| DataOps support | Yes (CLI, | Limited, and cloud only | Yes (CLI, API) | Yes (CLI, API) | CLI, API, |

| | Informatica | Matillion | Rivery | Talend | Estuary Flow |
|---|---|---|---|---|---|
| Deployment options | On premises, private cloud, public cloud | On premises (ETL), SaaS is different. | Public cloud only (multi-tenant) | On premises (self-hosted), private cloud, public cloud | Open source, public cloud, private cloud |
| The abilities | | | | | |
| Performance (minimum latency) | Sub-second | Mostly batch. Limited real-time with CDC deprecation. | Minutes (60 minutes starter, 15 minutes professional, 5 minutes enterprise minimum) | Sub-second loading at low volumes. Requires bulk mode to scale. | < 100 ms (in streaming mode). Supports any batch interval as well and can mix streaming and batch in 1 pipeline. |
| Scalability | High | High, with work | Med-High | High but requires bulk-mode loading | High |
| Reliability | High | High | High | High | High |
| Security | | | | | |
| Data Source Authentication | OAuth / HTTPS / SSH / SSL / API Tokens | OAuth / HTTPS / SSH / SSL / API Tokens | OAuth / HTTPS / SSH / SSL / API Tokens | OAuth / HTTPS / SSH / SSL / API Tokens | OAuth 2.0 / API Tokens |
| Encryption | Encryption at rest, in-motion | Encryption in motion (doesn’t store data) | Encryption at rest, in-motion | Encryption at rest, in-motion | Encryption at rest, in-motion |

| | Informatica | Matillion | Rivery | Talend | Estuary Flow |
|---|---|---|---|---|---|
| Support | High. Known for good support | High | Med-High | High | High |
| Costs | | | | | |
| Vendor costs | High | High | Medium - Low for small volumes (< 20 GB a month) | High | Low |
| Data engineering costs | High | High - steep learning curve and requires work to implement features like upserts | Med - requires some learning for building pipelines and transforms. | High - steep learning curve and requires work to implement features like upserts | Low-Med |
| Admin costs | High | High | Medium | High | Low |

Estuary

Estuary was founded in 2019. But the core technology, the Gazette open source project, has been evolving for a decade within the Ad Tech space, which is where many other real-time data technologies have started.

Estuary Flow and Rivery are the only two modern data pipeline tools in this comparison. Flow is a great option for batch sources and targets, but unlike Rivery and the others, where it really shines is any combination of change data capture (CDC), real-time and batch ETL or ELT, and loading multiple destinations with the same pipeline.

Estuary Flow has a unique architecture where it streams and stores streaming or batch data as collections of data, which are transactionally guaranteed to deliver exactly once from each source to the target. With CDC it means any (record) change is immediately captured once for multiple targets or later use. Estuary Flow uses collections for transactional guarantees and for later backfilling, restreaming, transforms, or other compute. The result is the lowest load and latency for any source, and the ability to reuse the same data for multiple real-time or batch targets across analytics, apps, and AI, or for other workloads such as stream processing, or monitoring and alerting.
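The capture-once, reuse-many-times idea can be pictured with a toy append-only log in Python. This is only a conceptual sketch and not Estuary's or Gazette's API. The `Collection` and `Target` classes are hypothetical, and everything is held in memory rather than in durable storage.

```python
class Collection:
    """Toy append-only log: data is captured once and read independently by many targets."""

    def __init__(self) -> None:
        self._records: list[dict] = []

    def append(self, record: dict) -> int:
        self._records.append(record)
        return len(self._records) - 1  # offset of the stored record

    def read_from(self, offset: int) -> list[dict]:
        return self._records[offset:]


class Target:
    """Each target tracks its own read offset, so it can be added late and still backfill."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.offset = 0
        self.rows: list[dict] = []

    def sync(self, collection: Collection) -> int:
        new_records = collection.read_from(self.offset)
        self.rows.extend(new_records)
        self.offset += len(new_records)
        return len(new_records)


orders = Collection()
orders.append({"id": 1, "status": "new"})
orders.append({"id": 1, "status": "paid"})

warehouse = Target("warehouse")
first_sync = warehouse.sync(orders)  # reads both stored changes

orders.append({"id": 2, "status": "new"})

vector_db = Target("vector_db")  # added later
backfill = vector_db.sync(orders)  # backfills from the stored log, not the source
catch_up = warehouse.sync(orders)  # only the one new change
print(first_sync, backfill, catch_up)
```

Because the second target reads from the stored log, adding it puts no extra load on the source database.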

Pros

- Modern data pipeline: Estuary Flow has the best support for schema drift, evolution, and automation, as well as modern DataOps.

- Modern transforms: Flow is also both low-code and code-friendly with support for SQL, TypeScript (and Python coming) for ETL, and dbt for ELT.

- Lowest latency: Several ETL vendors support low latency. But of these, Estuary can achieve the lowest, with sub-100ms latency.

- High scale: Unlike most ELT vendors, leading ETL vendors do scale. Estuary is proven to scale with one production pipeline moving 7GB+/sec at sub-second latency.

- Most efficient: Estuary alone has the fastest and most efficient CDC connectors. It is also the only vendor to enable exactly-and-only-once capture, which puts the least load on a system, especially when you’re supporting multiple destinations including a data warehouse, high performance analytics database, and AI engine or vector database.

- Deployment options: Of these ETL vendors, Estuary is currently the only vendor to offer open source, private cloud, and public multi-tenant SaaS.

- Reliability: Estuary’s exactly-once transactional delivery and durable stream storage make it very reliable.

- Ease of use: Estuary is one of the easiest tools to use. Most customers are able to get their first pipelines running in hours and generally improve productivity 4x over time.

- Lowest cost: for data at any volume, Estuary is the clear low-cost winner in this evaluation. Rivery is second.

- Great support: Customers consistently cite great support as one of the reasons for adopting Estuary.

Cons

- On premises connectors: Estuary has 150+ native connectors and supports 500+ Airbyte, Meltano, and Stitch open source connectors. But if you need on premises app or data warehouse connectivity make sure you have all the connectivity you need.

- Graphical ETL: Estuary has been more focused on SQL and dbt than graphical transformations. While it does infer data types and convert between sources and targets, there is currently no graphical transformation UI.

Pricing

Of the various ELT vendors, Estuary is the lowest total cost option. Rivery is the next lowest-cost option, though its pay-as-you-go pricing approaches 10x Estuary's cost or more at larger scales, before any negotiated enterprise pricing. Both are lower cost than the other ETL vendors.

Estuary only charges $0.50 per GB of data moved from each source or to each target, and $100 per connector per month. So you can expect to pay a minimum of a few thousand per year. But it quickly becomes the lowest cost pricing. Rivery, the next lowest cost option, is the only other vendor that publishes pricing of 1 RPU per 100MB, which is $7.50 to $12.50 per GB depending on the plan you choose. Estuary becomes the lowest cost option by the time you reach the 10s of GB/month. By the time you reach 1TB a month Estuary is 10x lower cost than the rest.
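The arithmetic behind these figures can be sketched in a few lines of Python. The function names are hypothetical, and the sketch rests on several assumptions: one source and one target connector, each GB billed once on capture and once per target for Estuary, only database sources for Rivery, and 1 GB = 1,000 MB (so 10 Rivery credits per GB). Actual invoices depend on each vendor's current terms.

```python
def estuary_monthly_cost(gb: float, targets: int = 1) -> float:
    """Assumes one source; each GB is billed once on capture and once per target."""
    connectors = 1 + targets
    return gb * 0.50 * (1 + targets) + connectors * 100.0


def rivery_monthly_cost(gb: float, credit_price: float) -> float:
    """Assumes database sources only, at 1 credit per 100 MB (10 credits per GB)."""
    credits = gb * 1000 / 100
    return credits * credit_price


for gb in (20, 1000):
    estuary = estuary_monthly_cost(gb)
    starter = rivery_monthly_cost(gb, credit_price=0.75)
    professional = rivery_monthly_cost(gb, credit_price=1.25)
    print(f"{gb} GB/month: Estuary ${estuary:,.0f}, "
          f"Rivery Starter ${starter:,.0f}, Rivery Professional ${professional:,.0f}")
```

Under these assumptions the fixed connector fee dominates at small volumes, and the per-GB rate dominates as volume grows into the tens of GB per month and beyond.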

Informatica

Informatica started as one of the first ETL vendors with PowerCenter, in 1993. It then released one of the first cloud integration products, Informatica Cloud, in 2006. Informatica Cloud was originally built on an older version of Informatica PowerCenter. After PowerCenter started to be replaced by a new Hadoop (Spark)-based framework, Informatica Cloud eventually moved over as well. After being taken private by Permira in 2015 and spending a long period as a private company, Informatica became publicly traded again in 2021.

Informatica is perhaps the best example of a mature data integration platform. While it was one of the first to make the transition to the cloud and has one of the strongest and broadest data integration feature sets, it is harder to use, more expensive, and not as DataOps-native. But it has great enterprise features and one of the better private cloud architectures.

Pros

- A comprehensive data management platform: Informatica Intelligent Data Management Cloud is much more than ETL-based data integration. It includes dozens of options including replication, data quality and master data management.

- Rich data integration functionality: Informatica has developed a rich library of capabilities over the years for data integration.

- Great connectors: Over 300 connectors, including proven connectors to high-performance on premises and cloud data warehouses.

- Performance and scalability: Informatica is built to support large deployments and deliver low latency data pipelines at scale. Informatica has supported serverless compute, pipeline partitioning, push-down optimization, and other features for years.

- Private cloud: Informatica is one of the few vendors that supports a private data plane deployment managed by a shared SaaS control plane.

Cons

- Harder to learn: While Informatica Cloud is easier than PowerCenter was, it still has a significant learning curve compared to most SaaS ELT services. This makes it more suitable for larger, specialized data integration teams.

- Doesn’t support DataOps as well: Informatica Cloud was built pre-CI/CD and DataOps. While you can use its CLI and API to automate deployment, it’s not as easy to automate. Schema evolution is supported, but there are some limitations depending on the source and destination. Versioning and other tasks are harder than some of the more modern ELT tools.

- Higher vendor costs: Informatica is more expensive than most other ELT and ETL vendors.

Pricing

Informatica’s consumption-based pricing is complicated and requires a quote. You can read the Informatica Cloud and Product Description Schedule here. Cloud is mostly based on hourly pricing per compute units, with some other pricing like row-based pricing for CDC-based replication. In general, you can expect a higher cost compared to most other vendors.

Matillion

Matillion ETL is an on-premises ETL platform that was created before the advent of cloud data warehouses, and it is still primarily deployed on premises. But its main destinations today are cloud data warehouses such as Snowflake, Amazon Redshift, and Google BigQuery. Matillion combines many features to extract, transform, and load (ETL) data. More recently Matillion has been adding cloud options as part of the Matillion Data Productivity Cloud. It consists of a Hub for administration and billing, plus a choice of two products: the on-premises Matillion ETL deployed as “private cloud”, or Matillion Data Loader, a free cloud batch and CDC replication tool built on Matillion ETL but lacking many of its capabilities, including transforms.

As with most of the mature ETL tools, Matillion has a strong set of features, but is harder to learn and use and is more expensive.

Pros

Perhaps one of the biggest advantages of Matillion is its ETL and orchestration, especially when compared to various ELT tools.

- Advanced transforms: Matillion ETL supports a variety of transform options, from drag-and-drop to code editors for complex transformations.

- Orchestration: Matillion offers advanced graphical workflow design and orchestration.

- Pushdown optimization: Matillion ETL can push down transformations to the target data warehouse.

- Reverse ETL: Matillion provides the ability to extract data from a source, cleanse it, and insert data back into the source.

Cons

- SaaS: Matillion ETL, its flagship product, is on-premises only. It does offer Data Loader, which is built on ETL, as a free cloud service for replication. There is also integration between Matillion ETL and the Matillion Cloud Hub for billing. While you can migrate work in Data Loader to ETL if you choose, it is a migration from the cloud to your own managed environment.

- Free tier: Matillion Data Loader is free, but it’s limited and doesn’t support transforms. This can make it challenging to fully evaluate the tool before committing to a paid plan.

- Connectors: Matillion has fewer connectors than most (120+ in total). You can invoke external APIs to access other systems, but access to all your sources and destinations can become an issue. Matillion is only used for loading data warehouses.

- No CDC: Matillion ETL CDC, which was based on Amazon DMS (in turn based on Attunity), has been deprecated. So right now there is no CDC option with Matillion.

- Schema evolution: Matillion does support adding columns to existing destination tables, deleting a column, and handling data type changes as sources change. But adding a table requires creating a new pipeline and there is no automation for schema evolution.

- dbt integration for SaaS: While Matillion ETL has a connector for dbt, there is no integration between Data Loader and dbt.

- Pricing: Compared to more modern ELT vendors, Matillion is expensive. It starts at $1000/month for 500 credits, and in practice the minimum is in the $1000s per month. The details are covered in the Pricing section.

Pricing

Matillion doesn’t have a pay-as-you-go model. It starts at $1000/month for 500 credits where each credit is a virtual core-hour similar to an AWS, Azure, or Google virtual core. Pricing increases 25% per credit for advanced and 35% for enterprise with higher base commitments.

In practice, the minimum is in the $1000s per month. Data Productivity Cloud consumes a credit per running task every 15 minutes, and only consumes credits while tasks are running. The smallest ETL unit is two cores, which means you consume 2 credits an hour, or nearly 3x the 500 credits every month if it runs continuously (2 x 24 x 30 = 1,440 credits).
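As a rough check on the "nearly 3x" figure, the Python sketch below works through the credit math for the smallest two-core ETL unit. It assumes the unit runs around the clock in a 30-day month, and that credits beyond the base 500 cost the same $2 each implied by $1000 for 500 credits. The second assumption is for illustration only, since actual overage rates are not stated here.

```python
HOURS_PER_MONTH = 24 * 30  # assumption: a 30-day month, running continuously

BASE_CREDITS = 500
BASE_PRICE = 1000.0  # dollars per month for the base 500 credits


def monthly_credits(cores: int, hours: int = HOURS_PER_MONTH) -> int:
    # One credit is one virtual core-hour, per the pricing described above.
    return cores * hours


credits = monthly_credits(cores=2)
multiple_of_base = credits / BASE_CREDITS
# Assumption: every credit costs the base plan's implied rate of $2.
estimated_cost = credits * (BASE_PRICE / BASE_CREDITS)

print(credits, round(multiple_of_base, 2), estimated_cost)
```

If the instance only runs part of the day, scale `hours` down accordingly. An 8-hour daily schedule would use a third of these credits.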

Rivery

Rivery was founded in 2019. Since then it has grown to 100 people and 350+ customers. It’s a multi-tenant public cloud SaaS ELT platform. It has some ETL features, including inline Python transforms and reverse ETL. It supports workflows and can also load multiple destinations.

But Rivery is also similar to batch ELT. There are a few cases where Rivery is real-time at the source, such as with CDC, which is its own implementation. But even in that case it ends up being batch, because it extracts to files and uses Kafka to stream those files to destinations, which are then loaded at minimum intervals of 60, 15, and 5 minutes for the Starter, Professional, and Enterprise plans, respectively.

If you’re looking for some ETL features and are OK with a public cloud-only option, Rivery is an option. It is less expensive than many ETL vendors, and also less expensive than Fivetran. But its pricing is medium-high for an ELT vendor.

Pros

- Modern data pipelines: Rivery is the one other modern data pipeline platform in this comparison along with Estuary.

- Transforms: You have an option of running Python (ETL) or SQL (ELT). You do need to make sure you use destination-specific SQL.

- Orchestration: Rivery lets you build workflows graphically.

- Reverse ETL: Rivery also supports reverse ETL.

- Load options: Rivery supports soft deletes (append only) and several update-in-place options including switch-merge (to merge updates from an existing table and switch), delete-merge (to delete older versions of rows), and a regular merge.

- Costs: Rivery is lower cost compared to other ETL vendors and Fivetran, though it is still higher than several ELT vendors.
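The difference between the append-only (soft delete) and update-in-place load options mentioned above can be illustrated with a small, generic Python sketch. It is not Rivery's implementation, and it does not model the switch-merge or delete-merge variants. The function names and the change format are hypothetical.

```python
def append_only(history: list[dict], change: dict) -> None:
    """Soft delete: every change, including a delete, is appended as a new row."""
    history.append({**change, "deleted": change["op"] == "delete"})


def merge(table: dict[int, dict], change: dict) -> None:
    """Update in place: upsert by key, and remove the row on delete."""
    if change["op"] == "delete":
        table.pop(change["id"], None)
    else:
        table[change["id"]] = {"id": change["id"], "name": change["name"]}


changes = [
    {"op": "upsert", "id": 1, "name": "Ada"},
    {"op": "upsert", "id": 1, "name": "Ada L."},
    {"op": "delete", "id": 1},
    {"op": "upsert", "id": 2, "name": "Bob"},
]

history: list[dict] = []
table: dict[int, dict] = {}
for change in changes:
    append_only(history, change)
    merge(table, change)

print(len(history), table)
```

The append-only target keeps the full change history, at the cost of needing a query-time step to find the current rows. The merged target holds only current state.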

Cons

- Batch only: While Rivery does extract from its CDC sources in real-time, which is the best approach, it does not support messaging sources or destinations, and only loads destinations in minimum intervals of 60 (Starter), 15 (Professional), or 5 (Enterprise) minutes.

- Data warehouse focus: While Rivery supports Postgres, Azure SQL, email, cloud storage, and a few other non data warehouse destinations, Rivery’s focus is data warehousing. It doesn’t support the other use cases as well.

- Public SaaS: Rivery is public cloud only. There is no private cloud or self-hosted option.

- Limited schema evolution: Rivery has good schema evolution support for its database sources. But the vast majority of its connectors are API-based, and those do not have good schema evolution support.

Pricing

Rivery charges per credit, which is $0.75 for Starter, $1.25 for Professional, and negotiated for Enterprise. You pay 1 credit per 100MB of moved data from databases, and 1 credit per API call. There is no charge for connectors. If you have low data volumes this will work well. But by the time you’re moving 20GB per month it starts to get more expensive than some others.

Talend

Talend, now part of Qlik, has two main products: Talend Data Fabric and Stitch, which is ELT. Talend Data Fabric is a data integration platform that, like Informatica, is broader than ETL. It also includes data quality and data governance capabilities.

Talend also had an open-source solution, Talend Open Studio, that could help you kickstart your first data integration and ETL projects. Qlik discontinued it in 2024.

You could use Talend Open Studio for data processes that required lightweight workflows. For the majority of enterprise data pipelines, you should consider Data Fabric.

Pros

- ETL platform: Data Fabric has rich transformation, data mapping, and data quality features that help with building data pipelines.

- Real-time and batch: Real-time support includes streaming CDC. While it’s mature technology, it is still real-time.

- Strong monitoring and analytics: Like Informatica, Talend has built up good visibility for operations.

Cons

- Learning curve: Talend has an older UI that takes time to learn, just like some other ETL tools. Building transforms can take time.

- Limited Open Studio features: while Open Studio is free, it’s also limited. Other open source options are less limited in their capabilities.

- Limited connectors: Talend claims 1000+ connectors. But it lists 50 or so databases, file systems, applications, messaging, and other systems it supports. The rest are Talend Cloud Connectors, which you create as reusable objects.

- High costs: there is no pricing listed, but it costs more than most pay-as-you-go tools, as well as Stitch.

Pricing

Outside of open source Open Studio and Singer, pricing quotes are available upon request. This should give you a sense that it’s going to be higher cost than Estuary, Rivery, and several of the pay-as-you-go ELT vendors, possibly with the exception of Fivetran.

How to choose the best option

For the most part, if you are interested in a cloud option, and the connectivity options exist, you may choose to evaluate Estuary.

- Modern data pipeline: Estuary has the broadest support for schema evolution and modern DataOps.

- Lowest latency: If low latency matters, Estuary will be the best option, especially at scale.

- Highest data engineering productivity: Estuary and Rivery are the easiest to use.

- Connectivity: if you’re more concerned about cloud services, Estuary and Rivery may be your best option. If you need more on premises connectivity, Informatica and Talend may be better. Informatica will have the most advanced on premises support.

- Lowest cost: Estuary is the clear low cost winner for medium and larger deployments.

- Streaming support: Estuary has a modern approach to CDC that is built for reliability and scale, and great Kafka support as well. It is arguably the best of all the options here. Matillion has deprecated its CDC support, and Rivery only has CDC support; it does not support messaging. Informatica and Talend also have good CDC and messaging support.

- Data governance tooling: Governance is important and not to be overlooked. If you’re looking for governance tooling from the same vendor, Informatica is the best, then Talend. Otherwise you can work with most of these vendors.

Ultimately, the best approach for evaluating your options is to identify your current and future needs for connectivity, key data integration features, performance, scalability, reliability, and security, and use this information to choose a good short-term and long-term solution.

Getting Started with Estuary

Getting started with Estuary is simple. Sign up for a free account.

Make sure you read through the documentation, especially the get started section.

I highly recommend you also join the Slack community. It’s the easiest way to get support while you’re getting started.

If you want an introduction and walk-through of Estuary, you can watch the Estuary 101 Webinar.

Questions? Feel free to contact us any time!

About the author

Rob has worked extensively in marketing and product marketing on database, data integration, API management, and application integration technologies at WSO2, Firebolt, Imply, GridGain, Axway, Informatica, and TIBCO.
